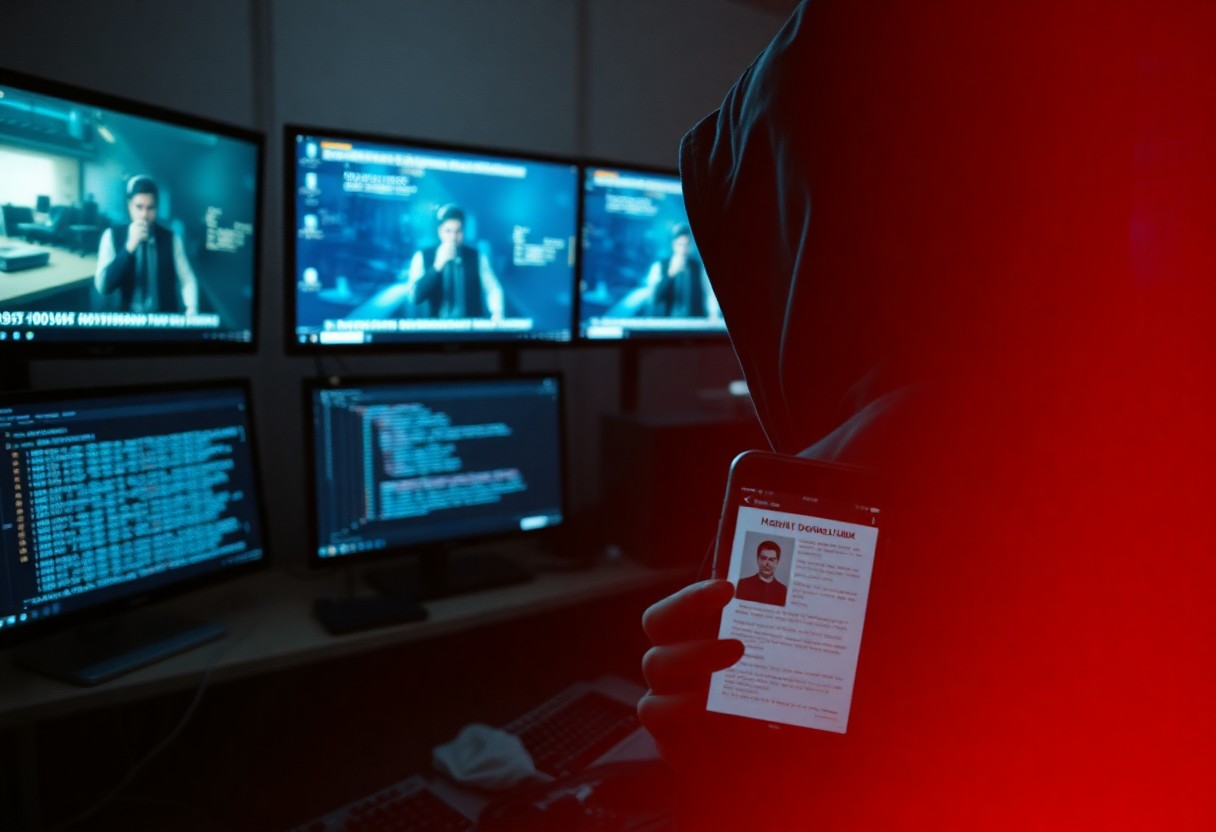

Over the past few years, you have likely noticed the rise of deepfake technology, which has become a significant tool for cyber fraud and identity exploitation. As AI makes digital impersonations ever more sophisticated, your personal and financial information is more vulnerable than ever. Understanding how deepfake-based scams operate and recognizing their dangers can help you protect your identity and your most sensitive assets in this rapidly evolving landscape.

As you look more closely at deepfake technology, it is important to understand its many facets and implications. Deepfakes are realistic synthetic or manipulated media, generated by artificial intelligence, that alter or replace a person's likeness or voice in audio and video content. The technology has both benign and malicious applications, which is why awareness matters as it continues to evolve.

Deepfakes are produced with deep learning models, most prominently autoencoders and generative adversarial networks (GANs), that synthesize hyper-realistic versions of people's voices and appearances. These models are trained on large datasets of images and audio of a target. The resulting fakes can be convincing enough that viewers accept the manipulated content as authentic. That level of realism creates significant risks across many domains.
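The core adversarial idea can be written in one line. The expression below is the standard GAN objective from Goodfellow et al. (2014); it is not the exact loss of any particular deepfake tool. A generator $G$ maps random noise $z$ to synthetic samples, and a discriminator $D$ outputs the probability that a sample is real:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

$D$ is trained to maximize $V$ by telling real samples from generated ones. $G$ is trained to minimize $V$ by producing samples that $D$ accepts as real. As training alternates between the two, the generator's output becomes steadily harder to distinguish from authentic media.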

Early deepfakes relied on comparatively simple face swapping, typically an autoencoder trained to map one person's face onto another's, and often showed visible artifacts. More recent methods build on GANs, in which a generator and a discriminator are trained against each other until the output becomes far more believable. Better face detection, alignment, and tracking have raised quality further, making it hard even for experts to tell the fake from the real.

Deepfake techniques have evolved rapidly alongside AI as a whole. The first widely recognized deepfakes appeared around 2017, and what began as rudimentary imitation has become a highly refined craft. Newer face-replacement algorithms can blend a target's face seamlessly onto an actor in video. Some run in real time, fooling viewers even in live settings such as video calls. Realistic voice synthesis can now accompany these visuals, amplifying the potential for identity exploits. Cases involving faked celebrities and public figures show how quickly the technology can be weaponized for misinformation and fraud, and they underline the urgent need for robust detection measures.

Cybercriminals increasingly use deepfake technology in sophisticated attacks on individuals and institutions alike. Their tactics include impersonation, financial scams, and identity theft, all designed to exploit trust and manipulate perceptions. Deepfakes counterfeit the very signals people rely on, such as a familiar face or voice. That is what lets fraudsters bypass traditional verification checks.

You may be surprised by how easily deepfakes enable impersonation and voice cloning, allowing cybercriminals to mimic the appearance and speech of trusted individuals. Fraudsters can, for instance, craft realistic video or audio clips of executives or family members to authorize transactions or extract sensitive information. Once a familiar face or voice can no longer be trusted as proof of identity, you and your assets are at significant risk.

Deepfake technology is fueling a surge in financial scams and identity theft. In these schemes, criminals build convincing narratives that lead you to hand over personal information or funds. For instance, scammers can impersonate a legitimate business with deepfaked calls or videos that back up credible-looking invoices, tricking you into paying fraudulent accounts. Victims often report losses in the thousands, which shows how severe the consequences of these schemes can be.

The statistics on these deepfake-driven scams are alarming. According to a report from the Federal Trade Commission, identity-theft losses related to deepfake fraud rose by more than 200% in the past year alone. The emotional toll compounds the financial damage, as victims often feel violated and betrayed. Stay vigilant, verify communications through an independent channel, and remain skeptical of unsolicited requests for sensitive information. Protecting yourself takes not just technology but also a keen awareness of the tactics fraudsters use.
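One concrete way to verify independently is to check a payment request against records your organization already holds, rather than against anything the request itself supplies. The sketch below is a hypothetical illustration. `PaymentRequest`, `VENDOR_RECORDS`, and `review_payment` are invented names. The IBANs are commonly used documentation examples, not real accounts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PaymentRequest:
    vendor_id: str
    iban: str           # bank account the request asks you to pay
    contact_phone: str  # callback number supplied in the request itself


# Hypothetical vendor master data, maintained independently of incoming requests.
VENDOR_RECORDS: dict[str, dict[str, str]] = {
    "ACME-001": {"iban": "DE89370400440532013000", "phone_on_file": "+49 30 1234567"},
}


def review_payment(req: PaymentRequest,
                   records: dict[str, dict[str, str]] = VENDOR_RECORDS) -> list[str]:
    """Return reasons to hold the payment; an empty list means no red flags were found."""
    record = records.get(req.vendor_id)
    if record is None:
        return ["unknown vendor: verify identity before paying"]
    reasons = []
    if req.iban.replace(" ", "").upper() != record["iban"]:
        # Changed bank details are a common red flag for invoice fraud.
        reasons.append(f"bank details differ from records: call {record['phone_on_file']} to confirm")
    if req.contact_phone != record["phone_on_file"]:
        # A number supplied by the requester may lead straight back to the fraudster.
        reasons.append("contact number differs from records: do not use it to verify")
    return reasons


if __name__ == "__main__":
    suspicious = PaymentRequest("ACME-001", "GB29 NWBK 6016 1331 9268 19", "+44 20 7946 0000")
    for reason in review_payment(suspicious):
        print(reason)
```

When the details do not match, the check holds the payment and points only to the number on file. A convincing voice or face on the line does not change that outcome.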

Deepfake regulation varies significantly by jurisdiction, so it is important to know which laws apply to you. In the United States, states such as California and Texas have enacted laws that specifically target deepfake misuse, notably in election content and non-consensual sexual imagery. There is, however, still no comprehensive federal legislation, which leaves gaps in accountability. The European Union's AI Act, adopted in 2024, requires deepfake content to be disclosed as artificially generated or manipulated. It reflects the push for legal structures that keep pace with rapid technological change.

As deepfakes proliferate, ethical concerns about misinformation and trust in media escalate. You might feel a growing unease as people struggle to tell reality from fabrication, which erodes public trust even in authentic content. Recent surveys indicate that approximately 85% of people are concerned about the impact of deepfakes on society, highlighting the need for ethical guidelines in content creation.

Widespread fear of misinformation shapes public perception and calls into question the integrity of both media and personal communication. When people encounter realistic deepfake videos, emotional reactions can skew their judgment, harming personal relationships or professional reputations. Large-scale misinformation campaigns built on deepfakes threaten not only individuals but also democratic processes, and they deepen societal divides. In this context, critical media literacy becomes crucial: question the authenticity of what you see, and stay aware of the risks this rapidly evolving technology poses. That awareness helps you navigate and scrutinize the content you consume in the AI era.

As deepfake technology evolves, implementing effective detection and prevention strategies becomes vital for safeguarding against cyber fraud. Utilizing AI-based tools and improving digital literacy can significantly reduce the risks associated with identity exploits. Establishing strict verification protocols, particularly in sensitive transactions, will also bolster defenses. Staying informed about emerging threats is critical for both individuals and organizations to mitigate potential damage.

Advances in deepfake detection are paving the way for stronger security measures. Machine-learning detectors analyze videos for inconsistencies, such as unnatural blinking patterns or mismatches between lip movement and audio, to identify manipulated content. Companies such as Sensity (formerly Deeptrace) offer detection services that can help you identify deepfakes before they cause harm.
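The blinking cue can be illustrated with the eye aspect ratio (EAR) of Soukupová and Čech (2016), which drops sharply when the eyelids close. The sketch below makes several assumptions:

- You already have six eye landmarks per frame from a separate face-landmark detector, which is not shown.
- The thresholds (`threshold=0.2`, and a normal range of 6 to 40 blinks per minute) are illustrative, not validated values.

Later generators learned to blink naturally, so treat this as one weak signal among many rather than a detector in its own right.

```python
import math
from collections.abc import Sequence

Point = tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six landmarks p1..p6: p1/p4 are the eye corners,
    p2/p3 lie on the upper lid and p6/p5 on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks = run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # clip ended while the eye was still closed
        blinks += 1
    return blinks


def blink_rate_suspicious(ear_series: Sequence[float], fps: float,
                          low: float = 6.0, high: float = 40.0) -> bool:
    """Flag clips whose blinks per minute fall outside an assumed normal range [low, high]."""
    minutes = len(ear_series) / fps / 60.0
    if minutes == 0:
        return False
    rate = count_blinks(ear_series) / minutes
    return not (low <= rate <= high)
```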

Implementing best practices forms the backbone of a robust defense against deepfake threats. You should adopt a multi-layered approach that includes public awareness campaigns and stringent verification processes, especially for high-stakes communications. Utilizing watermarking and digital signatures can also help authenticate media, while training employees to recognize potential deepfakes can significantly reduce exposure to fraud.
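As a minimal illustration of authenticating media, the sketch below tags a file's bytes with an HMAC-SHA256 using only Python's standard library. This is a deliberate simplification. HMAC relies on a shared secret key, whereas a true digital signature (for example Ed25519) lets anyone verify with a public key. Neither technique is a watermark embedded in the content itself. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def tag_media(media: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag binding these exact bytes to the publisher's key."""
    return hmac.new(key, media, hashlib.sha256).hexdigest()


def verify_media(media: bytes, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare in constant time; any change to the bytes fails."""
    return hmac.compare_digest(tag_media(media, key), tag)


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # shared secret held by publisher and verifier
    video = b"...original video bytes..."
    tag = tag_media(video, key)
    print(verify_media(video, tag, key))            # True
    print(verify_media(video + b"\x00", tag, key))  # False
```

The tag covers the exact bytes, so any edit makes verification fail, including a harmless re-encode. That is the intended behavior for proving a file is unaltered, but it also means the tag must be checked against the original file.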

Creating a culture of awareness is vital; conducting regular training sessions about deepfake technology and its risks can empower your staff to spot potential threats. Implementing a verification process for critical communications, such as video calls and financial transactions, helps ensure authenticity. Additionally, leveraging AI-based detection tools can provide an extra layer of security, allowing you to detect and respond to deepfake threats effectively. By embracing both technology and training, you will bolster your defenses against the challenges posed by deepfake technology.
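A verification process for critical communications can be written down as explicit rules, so nobody has to judge on the spot whether a caller "looks right". The sketch below is hypothetical. The `Request` fields, the channel list, and the 10,000 threshold are assumptions to adapt to your own policy.

```python
from dataclasses import dataclass
from enum import Enum


class Channel(Enum):
    EMAIL = "email"
    PHONE = "phone"
    VIDEO_CALL = "video_call"
    IN_PERSON = "in_person"


@dataclass(frozen=True)
class Request:
    channel: Channel
    amount: float           # 0 for requests that move no money
    asks_for_secrets: bool  # passwords, one-time codes, customer data, etc.
    marked_urgent: bool


DUAL_APPROVAL_THRESHOLD = 10_000.0  # hypothetical; set to match your risk appetite

CALLBACK = "call back on a number from the internal directory, not one given in the request"
SECOND_APPROVER = "obtain sign-off from a second approver"
NO_SHORTCUTS = "urgency waives no check; escalate to security if pressured"


def required_checks(req: Request) -> list[str]:
    """Return the verification steps a request must pass before anyone acts on it."""
    checks: list[str] = []
    # A face or voice on a remote channel is never treated as proof of identity.
    if req.channel is not Channel.IN_PERSON and (req.amount > 0 or req.asks_for_secrets):
        checks.append(CALLBACK)
    if req.amount >= DUAL_APPROVAL_THRESHOLD:
        checks.append(SECOND_APPROVER)
    if req.marked_urgent and checks:
        checks.append(NO_SHORTCUTS)
    return checks


if __name__ == "__main__":
    ceo_call = Request(Channel.VIDEO_CALL, 250_000.0, asks_for_secrets=False, marked_urgent=True)
    for step in required_checks(ceo_call):
        print("-", step)
```

Encoding urgency as something that adds a step, rather than removes one, targets the pressure tactic that deepfake impersonation usually relies on.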

Examples of deepfake cyber fraud demonstrate the alarming potential of this technology. Notable case studies reveal how deepfakes can lead to significant financial losses and identity theft.

High-profile incidents show how sophisticated and effective these attacks can be. In 2019, the chief executive of a UK-based energy firm wired €220,000 to fraudsters after a phone call. On that call, AI-generated audio convincingly imitated the voice of his boss at the firm's German parent company, showing how realistic synthetic voices had already become.

The ramifications of deepfake cyber fraud extend beyond immediate financial loss. Victims may face significant emotional distress, damage to reputation, and long-term financial struggles. Organizations bear the costs of recovery, legal fees, and potential regulatory scrutiny, which can culminate in a lasting impact on their credibility.

Beyond financial losses, victims of deepfake fraud often suffer severe reputational damage and emotional trauma. For organizations, the repercussions multiply, eroding stakeholder trust and market position. Companies may invest heavily in cybersecurity after an attack, diverting resources from growth initiatives. The resulting media attention can also trigger public relations crises that further undermine trust. Taken together, these effects show that deepfakes are a persistent threat requiring heightened awareness and preparedness.

As deepfake technology evolves, its implications for cybersecurity will become even more significant. You can expect a dual-sided progression, where cybercriminals leverage sophisticated deepfakes for fraud, while defensive technologies work tirelessly to counter these threats. Innovation in detection and prevention will remain pivotal as organizations assess their vulnerabilities against emerging tactics.

As deepfake creation tools become more accessible, their use among cybercriminals is likely to surge, and advanced detection algorithms will become a key focus for cybersecurity experts. As industries adapt, you can expect more mandatory regulations aimed at mitigating deepfake risks and holding those who misuse the technology accountable.

AI will fundamentally transform the landscape of cybersecurity, especially in countering deepfakes. Enhanced machine learning models and pattern recognition systems will enable quicker identification and remediation of potential threats arising from deepfake-generated content.

The integration of AI into cybersecurity strategies provides a predictive ability, allowing you to anticipate anomalies and phishing attempts before they escalate. For instance, recent advancements in deep learning have led to AI-driven detection systems capable of recognizing the subtle inconsistencies within deepfake videos, effectively flagging them for further scrutiny. AI tools can analyze voice and facial movements for authenticity, enabling organizations to respond swiftly to potential identity exploitation. As these technologies improve, your organization’s resilience against deepfake-related threats will increase, reinforcing trust in digital communications and transactions.
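Many detection systems score individual frames, and something has to turn those scores into a decision to flag a clip. The sketch below assumes a hypothetical detector that outputs a per-frame probability of manipulation. The thresholds and the 15-frame run length are illustrative assumptions. A clip is flagged when its average score is high, or when a sustained run of frames scores very high, since a manipulation may affect only part of a video.

```python
from collections.abc import Sequence
from statistics import fmean


def flag_for_review(frame_scores: Sequence[float], mean_threshold: float = 0.5,
                    frame_threshold: float = 0.9, min_run: int = 15) -> bool:
    """Decide whether a clip goes to human review, given per-frame manipulation scores in [0, 1].

    A clip is flagged if its average score reaches `mean_threshold`, or if at least
    `min_run` consecutive frames score at or above `frame_threshold`.
    """
    if not frame_scores:
        return False
    if fmean(frame_scores) >= mean_threshold:
        return True
    run = 0
    for score in frame_scores:
        run = run + 1 if score >= frame_threshold else 0
        if run >= min_run:
            return True
    return False


if __name__ == "__main__":
    # Low average score, but a 15-frame burst of high scores still triggers review.
    print(flag_for_review([0.1] * 85 + [0.95] * 15))  # True
```

Flagging for human review rather than blocking outright keeps false positives from disrupting legitimate calls. It still routes suspicious content to someone who can verify it.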

As the technology evolves, you must remain vigilant against deepfake-based cyber fraud and identity exploitation. Understanding the methods cybercriminals use helps you safeguard your personal and financial information. By implementing robust security measures and staying informed about emerging threats, you can reduce the risks posed by these advanced forms of deception. A proactive approach is essential to protecting yourself in this AI-driven landscape.

A: Deepfake technology allows malicious actors to create realistic audio and video impersonations of individuals. This can lead to various cyber fraud attempts, such as identity theft, financial scams, and misinformation campaigns. Attackers may impersonate executives in video calls to authorize fraudulent transactions or create fake news clips to damage reputations and manipulate public perception.

A: To mitigate risks, individuals and organizations should implement multi-factor authentication and conduct identity verification processes that go beyond video evidence. Training employees to recognize signs of deepfakes, utilizing deepfake detection tools, and staying updated on the latest security practices can also help safeguard against identity exploitation.
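Multi-factor authentication helps against deepfakes because a cloned face or voice cannot produce a code from a device the real person holds. As an illustration, the sketch below implements time-based one-time passwords (TOTP, RFC 6238), the scheme most authenticator apps use, with Python's standard library. It is a teaching sketch under stated assumptions:

- The key is the RFC's published test key.
- Real deployments would provision a random per-user secret, usually shared as base32.
- That secret would be stored securely, and verification attempts would be rate-limited.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP whose counter is the number of `step`-second periods since the Unix epoch."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify_totp(key: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current period or up to `window` periods either side (clock drift)."""
    now = time.time() if at is None else at
    counter = int(now // step)
    return any(
        hmac.compare_digest(hotp(key, c, digits), submitted)
        for c in range(max(0, counter - window), counter + window + 1)
    )


if __name__ == "__main__":
    key = b"12345678901234567890"  # RFC 6238 test key; never use a fixed key in practice
    print(totp(key, at=59, digits=8))  # 94287082, the RFC 6238 test vector
```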

A: Perpetrators of deepfake-based cyber fraud may face criminal charges such as fraud, identity theft, or defamation, depending on the jurisdiction. Victims can also pursue civil action for damages. Laws are evolving to address the unique challenges posed by deepfakes, and many regions are introducing legislation to specifically target the harmful use of this technology.